The Security Theater in Test Automation: When Log Masking Meets Public Cloud Testing

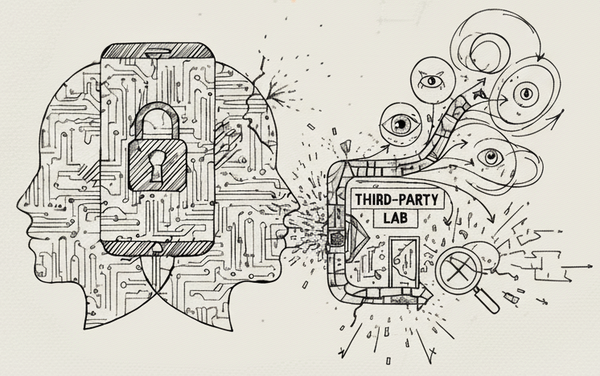

Appium 3.0 celebrates log masking while teams upload entire banking apps to public clouds. The irony? Device labs read your passwords in plain text before "masking" them for you. Welcome to security theater in test automation.

The Irony We Need to Talk About

The test automation community just witnessed a fascinating display of security theater with Appium 3.0's release. The headline feature that everyone's celebrating? Sophisticated log masking for sensitive data. But here's the reality nobody wants to discuss: most companies implementing this feature are simultaneously running their tests on public cloud devices, sending their entire application data through third-party infrastructure.

Let me paint you the picture of this absurdity.

The Grand Announcement: "We Care About Your Security"

Appium 2.18.0 and later, 3.0 included, now offer what they call an elegant solution to mask sensitive values in logs. The documentation proudly declares that "it is the right call to hide sensitive information, like passwords, tokens, etc. from server logs, so it does not accidentally leak if these logs end up in wrong hands."

The implementation is straightforward enough. You wrap sensitive values with logger.markSensitive(), add a custom header to your requests, and you're done. It's maybe a few minutes of work per logging statement. Simple change, minimal effort.
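To show how little is involved, here is a minimal Python model of the pattern: wrap the value, then mask it at log time only if the request asked for it. This is a conceptual sketch, not Appium's implementation (the real helper is the JavaScript logger.markSensitive()). The names mark_sensitive, render and masking_requested are hypothetical, the "***" placeholder is arbitrary, and the header name X-Appium-Is-Sensitive is my recollection of the Appium docs, so treat it as an assumption.

```python
from dataclasses import dataclass

MASK = "***"  # arbitrary placeholder, not necessarily what Appium prints


@dataclass(frozen=True)
class Sensitive:
    """Wraps a value that should be hidden when masking is requested."""
    value: str


def mark_sensitive(value: str) -> Sensitive:
    """Hypothetical stand-in for the idea behind logger.markSensitive()."""
    return Sensitive(value)


def masking_requested(headers: dict) -> bool:
    """Return True if the (assumed) opt-in header is set to '1' or 'true'."""
    normalized = {k.lower(): v for k, v in headers.items()}
    return normalized.get("x-appium-is-sensitive", "").lower() in ("1", "true")


def render(template: str, *args, mask: bool) -> str:
    """Format a log line, replacing wrapped values only when mask is True."""
    shown = []
    for arg in args:
        if isinstance(arg, Sensitive):
            shown.append(MASK if mask else arg.value)
        else:
            shown.append(str(arg))
    return template.format(*shown)


if __name__ == "__main__":
    headers = {"X-Appium-Is-Sensitive": "true"}
    print(render("Typing {} into {}", mark_sensitive("SuperSecret123"),
                 "password", mask=masking_requested(headers)))
```

Note what the sketch does not do: the value inside Sensitive is still the real password. Masking changes what gets printed, not what the process holds.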

And that's what makes this even more absurd. They're promoting this trivial security measure as a major feature, while everyone implementing it is simultaneously doing something monumentally insecure.

Meanwhile, in the Real World...

While engineering teams quickly implement log masking, making these trivial code changes to protect passwords in log files, they're uploading their entire application to public device labs. Whether it's BrowserStack, Sauce Labs, AWS Device Farm, Firebase Test Lab, or any of the dozen other providers, the story is the same.

Think about that for a moment. You're carefully masking a password in a log file that sits on your local machine or your own CI/CD server, but you're perfectly comfortable uploading your entire APK or IPA file to servers you've never seen, owned by companies whose primary obligation is to their shareholders, not your security.

Your "secured" test is running on shared infrastructure. Your entire application binary, containing all your API keys, endpoints, and certificates, lives on someone else's computer. Every API call your app makes routes through someone else's network. Your authentication tokens exist in someone else's memory. Your test data resides on devices you'll never physically control, in data centers you'll never visit, managed by people you'll never meet.

But hey, at least your local Appium logs are masked! That's what matters, right?

The Data Flow Reality Check

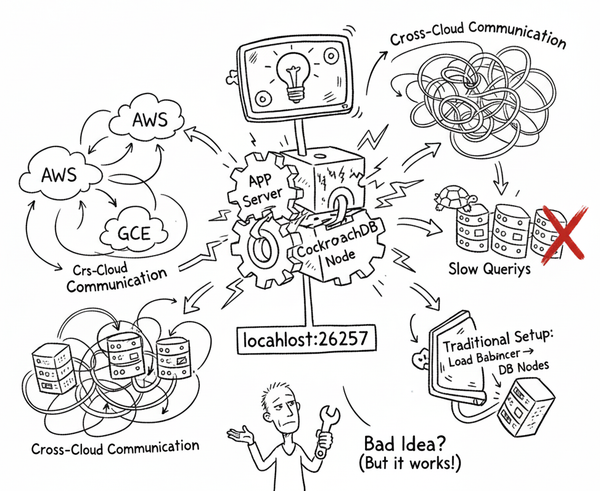

Let's trace what actually happens when you run one of these "secure" tests with beautifully masked logs. First, you upload your entire application binary to a third-party service. This binary contains everything: your API endpoints, your certificate pins, your obfuscated (but not really) API keys. It's all there, packaged up nicely and sent to someone else's servers.
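If "it's all there" sounds abstract, a few lines of Python make it concrete. APK and IPA files are zip archives, so anyone holding the file can walk its entries and grep for URLs and key-like strings. The sketch below is deliberately naive: the regexes, the scan_archive name and the demo archive are all illustrative assumptions, and real binaries store many strings in formats (compiled resources, UTF-16) that this simple byte scan would miss.

```python
import io
import re
import zipfile

# Naive patterns, for illustration only.
URL_RE = re.compile(rb"https?://[\w./:%?&=#~+-]+")
KEY_RE = re.compile(rb"(?i)(api[_-]?key|secret|token)\W{1,4}([A-Za-z0-9_-]{16,})")


def scan_archive(data: bytes) -> dict:
    """Scan every entry of a zip archive for URLs and key-like strings."""
    found = {"urls": set(), "keys": set()}
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        for name in archive.namelist():
            blob = archive.read(name)
            found["urls"].update(m.group(0).decode() for m in URL_RE.finditer(blob))
            found["keys"].update(m.group(2).decode() for m in KEY_RE.finditer(blob))
    return found


def build_demo_archive() -> bytes:
    """Build a tiny in-memory zip that stands in for an app package."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr(
            "assets/config.json",
            '{"base_url": "https://api.example.test/v1", '
            '"api_key": "abcd1234efgh5678ijkl"}',
        )
    return buffer.getvalue()


if __name__ == "__main__":
    print(scan_archive(build_demo_archive()))
```

No log line is involved anywhere in that scan, which is the point: log masking has nothing to say about what ships inside the binary.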

Next comes the installation phase. Your app gets installed on a device you don't own, in a data center you can't access, on infrastructure you don't control. Then execution begins. Your app starts making API calls that traverse the device's network stack, controlled entirely by the provider. These calls go through the provider's proxy servers, where they can inspect every byte of traffic. They hit the provider's logging infrastructure long before your fancy masks even apply.

All your test data, user credentials, and API responses sit in device memory accessible to the provider, in network logs captured before your masking, and in the provider's debugging tools that are recording everything.

But here's the kicker that exposes the whole charade: the public device lab providers themselves aren't masking data by default. They first read and parse every Appium command you send in plain text. They analyze what command is being executed, what parameters are being passed, what data is flowing through. Only then, after they've already seen everything, do they decide whether to apply masking in the logs they show you.

Think about that. The sensitive data you're so carefully masking? Every major cloud testing provider sees it. They have to see it to execute your tests. The masking happens after they've already processed your plain text commands. They're essentially saying, "Don't worry, we'll hide your passwords... after we've read them, stored them in our systems, and passed them through our infrastructure."

Your carefully masked logs are protecting you from... yourself, basically. The third-party providers are like the magician's assistant who's already seen how the trick works but promises to act surprised for the audience. It's like putting on a blindfold while someone else drives your car – sure, you can't see where you're going, but the driver sees everything.

The Contradictions Playing Out Everywhere

Picture this scenario that's happening in financial services companies right now. Teams spend maybe an afternoon implementing log masking for their test suites. Every password, every account number, every transaction ID gets wrapped in markSensitive(). Such a simple change! Security teams sign off. Compliance departments are thrilled. Everyone celebrates this "achievement" in protecting customer data.

These same teams then upload their banking apps to public device labs to test on 50 different devices. The entire app binary gets analyzed by the provider's systems. All network traffic is proxied through third-party infrastructure. Test accounts with real API access are used because "the test environment needs to be realistic." Session tokens are visible in device memory that cloud providers control entirely.

The justification? "But they're SOC 2 compliant." As if that somehow negates the fact that entire applications are being handed over to third parties. The providers read all test commands in plain text before deciding what to mask. The logs users see are masked, yes. After the provider has already processed everything in plain text.

Or consider what's happening in healthcare companies implementing Appium's new security features. They're masking patient IDs, medical record numbers, and prescription data in all logs. HIPAA compliance officers are impressed. Meanwhile, these same companies are running tests containing PHI test data through public cloud testing infrastructure. Medical records APIs are being called from external devices. Authentication flows are happening on shared hardware. Compliance requirements are essentially being waived because "it's just testing."

The cognitive dissonance is staggering.

The Vendors' Convenient Omission: They See Everything First

Here's the dirty secret that device lab providers don't advertise: log masking on their platforms is theater performed after the show is over. When you send a command like driver.findElement(By.id("password")).sendKeys("SuperSecret123"), that command travels to their servers in plain text. Their systems parse it, read it, execute it, and then – only then – decide if they should mask "SuperSecret123" in the logs they return to you.

This isn't speculation. This is how it has to work. The provider's infrastructure must read and understand your Appium commands to execute them. They must process your sendKeys values to type them into the device. They must handle your API endpoints to route the traffic. The masking is applied to the output they show you, not to what they see and process internally.
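The order of operations is easy to model. Below is a toy Python handler for a send-keys request, written under stated assumptions: the {"text": ...} body follows the W3C WebDriver Element Send Keys shape, the X-Appium-Is-Sensitive header name is my recollection of the Appium docs, and FakeDevice, handle_send_keys and internal_trace are hypothetical names. internal_trace simply stands in for any internal component that touches the value; it is not a claim about what a specific provider stores.

```python
import json

MASK = "***"  # arbitrary placeholder for the customer-facing log


class FakeDevice:
    """Stand-in for a real device: it just records what was typed."""

    def __init__(self) -> None:
        self.typed = []

    def type_text(self, text: str) -> None:
        self.typed.append(text)


def handle_send_keys(raw_body: bytes, headers: dict,
                     device: FakeDevice, internal_trace: list) -> str:
    """Process one send-keys request and return the customer-facing log line."""
    # 1. The server has to parse the body to learn what to type.
    text = json.loads(raw_body)["text"]

    # 2. Anything running at this point sees the plain value.
    internal_trace.append(text)

    # 3. The device receives the plain value; it cannot type a mask.
    device.type_text(text)

    # 4. Only now is masking applied, and only to the log line.
    flag = {k.lower(): v for k, v in headers.items()}.get("x-appium-is-sensitive", "")
    shown = MASK if flag.lower() in ("1", "true") else text
    return f"sendKeys text={shown}"


if __name__ == "__main__":
    device, trace = FakeDevice(), []
    body = json.dumps({"text": "SuperSecret123"}).encode()
    print(handle_send_keys(body, {"X-Appium-Is-Sensitive": "true"}, device, trace))
    print(device.typed, trace)
```

Steps 1 through 3 cannot be reordered after step 4. Whoever runs this handler holds the plain value before any mask exists.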

Cloud testing providers have mastered the art of security theater. They market "enterprise-grade security" and now they'll probably add "supports Appium 3.0 log masking" to their feature lists. What they won't tell you is that they're the ones doing the masking after already seeing your data. How many employees have access to your running tests before the masking is applied? They won't tell you. What happens to the pre-masked data in their internal systems? "Proprietary process," they say. Are your plain text passwords logged in their internal infrastructure before they apply the mask for your viewing comfort? "We take security seriously" is not an answer.

Instead, their security pages focus on the theatrical elements. They have SOC 2 compliance, which sounds impressive but doesn't prevent data access. They use encrypted connections, which they decrypt at their end anyway. They provide "isolated" test environments that are still on their infrastructure. They promise clean devices but won't specify if that means memory-wiped at the hardware level or just a factory reset that any digital forensics expert could recover data from.

The Community's Cognitive Dissonance

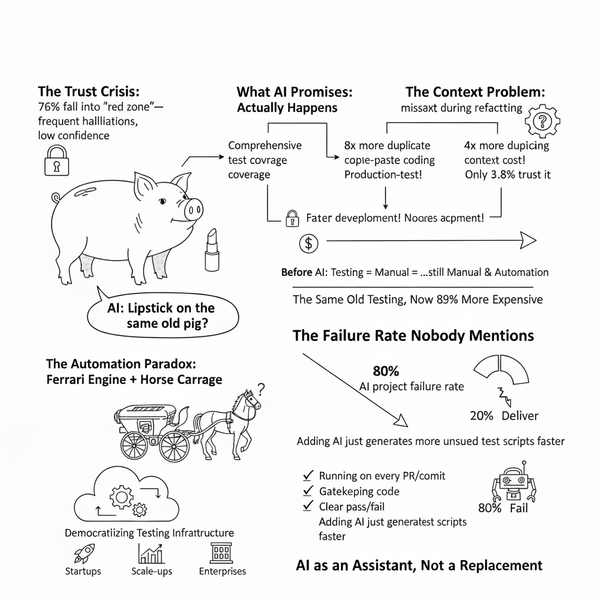

The test automation community has developed a remarkable ability to hold two contradictory beliefs simultaneously. We celebrate security features like log masking as major advances. We write blog posts about the importance of protecting sensitive data. We attend conferences where speakers emphasize security best practices. We implement elaborate schemes to hide passwords in our log files.

At the same time, we advocate for cloud-based testing as a "best practice." We share credentials for public device farms in our documentation. We create "secure" test frameworks that are designed to run on insecure infrastructure. We upload our production apps to third-party services and call it professional testing.

It's like a community of locksmiths who spend their days crafting elaborate locks for their front doors while leaving their windows wide open and posting their addresses online.

What This Really Means

This isn't about Appium doing something wrong. Log masking is genuinely useful for local testing and CI/CD pipelines you control. This is about the automation industry's refusal to acknowledge the massive elephant in the room. You cannot claim to prioritize security while running your tests on infrastructure you don't control. It's not a nuanced position. It's not a trade-off. It's a contradiction.

The harsh truth is that most companies have already decided that convenience matters more than security. And that's fine! It's a valid business decision. What's not fine is pretending otherwise. What's not fine is implementing elaborate security theater to make ourselves feel better while ignoring the actual vulnerabilities.

The Honest Questions We Should Be Asking

Why are we making these trivial log masking changes when the entire application is exposed on third-party devices? It's a question that makes people uncomfortable because the answer is obvious: we're doing it to check a box, to feel like we're "doing security," to have something to point to when auditors ask about our security practices. It takes five minutes to implement and gives us a year's worth of security theater talking points.

Who actually has access to the test devices while our apps are running? The vendors won't give you a straight answer because the truth is uncomfortable. It's not just the engineers. It's support staff, it's contractors, it's potentially anyone with the right access level in their organization.

What guarantees exist that our app binaries aren't being analyzed or stored? None. There are promises, there are legal agreements, there are SOC 2 certifications, but there are no guarantees. You are trusting a for-profit company with your entire application.

How is device memory really handled between test sessions? Another question that gets vague answers because the real answer would make security-conscious developers very uncomfortable.

Why do we accept this double standard as an industry norm? Because we've collectively decided that the convenience of not maintaining our own device labs is worth the security risk. We just don't want to admit it out loud.

A Call for Intellectual Honesty

The automation community needs to stop pretending that log masking and public cloud testing can coexist in a "secure" testing strategy. We need to choose. Either admit that security isn't actually a priority and embrace the convenience of cloud testing without the security theater, or actually prioritize security by investing in on-premise device labs and truly isolated test environments.

But please, let's stop the charade of implementing elaborate log masking schemes while uploading our entire applications to devices we'll never see, in data centers we'll never visit, controlled by companies whose primary interest is not our security but their profit margins.

The Path Forward

If you genuinely care about test security, you have three honest options.

The first is full commitment to security. Build on-premise device labs. Use dedicated test infrastructure. Implement true network isolation. Control the entire test pipeline. Yes, it's expensive. Yes, it's complex. But it's the only way to actually achieve the security you're pretending to have with log masking.

The second is honest risk acceptance. Acknowledge the security trade-offs openly. Document the risks for stakeholders. Use test-only environments and data that have no connection to production. Stop pretending log masking solves the problem when you're uploading everything anyway.

The third is a hybrid reality that acknowledges the nuances. Use public clouds only for non-sensitive apps. Keep sensitive testing on-premise. Be transparent about where the security boundaries actually are. Don't waste time masking logs for apps you're uploading to third parties anyway.
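The hybrid option only works if the boundary is written down rather than decided per sprint. One way to do that is a small routing rule in the pipeline. The sketch below is an assumption about how such a policy could look, not a prescription: RunProfile, Target, choose_target and the three criteria are all hypothetical and should be replaced with whatever your own risk assessment says.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_PREM = "on-prem"
    PUBLIC_CLOUD = "public-cloud"


@dataclass(frozen=True)
class RunProfile:
    """Hypothetical description of what a test run exposes."""
    app_name: str
    handles_regulated_data: bool
    uses_production_credentials: bool
    binary_embeds_secrets: bool


def choose_target(run: RunProfile) -> Target:
    """Send anything sensitive on-prem; only the rest may use a public cloud."""
    sensitive = (
        run.handles_regulated_data
        or run.uses_production_credentials
        or run.binary_embeds_secrets
    )
    return Target.ON_PREM if sensitive else Target.PUBLIC_CLOUD


if __name__ == "__main__":
    banking = RunProfile("banking-app", True, False, True)
    brochure = RunProfile("marketing-brochure", False, False, False)
    print(choose_target(banking).value, choose_target(brochure).value)
```

The value of a rule like this is less the code than the fact that someone had to state, in reviewable form, which apps are allowed to leave the building.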

Conclusion: The Emperor's New Logs

Appium's log masking feature is like putting a privacy screen on your phone while live-streaming your screen to YouTube. But it's worse than that – it's like YouTube applying the privacy filter to the video they show you while keeping the original unfiltered stream for themselves. The public device labs see everything in plain text, process it, execute it, and then thoughtfully mask it in the logs they return to you. How considerate!

The test automation industry needs to have an honest conversation about security. We need to stop celebrating incremental security features while ignoring the massive security holes in our standard practices. We need to acknowledge that when we upload our apps to public device clouds, we're not just making a conscious choice to prioritize convenience over security – we're literally sending them our passwords in plain text and then celebrating when they promise to hide those passwords in the logs they show us.

The masking isn't protecting your data from the device lab providers. They've already seen it. They had to see it to run your tests. The masking is just preventing you from seeing your own passwords in your own logs, after the horse has already left the barn, toured the countryside, and set up residence in someone else's stable.

Until we can have that honest conversation, we're just masking our logs while exposing our souls. We're putting locks on doors that nobody uses while leaving the windows wide open. We're participating in an elaborate performance where everyone knows the truth but nobody wants to say it out loud.

The emperor has no clothes, but at least his logs are masked.

What's your take? Are you masking logs while testing on public clouds? Let's have an honest discussion about security theater in test automation. Reach out at lets-talk@devicelab.dev to share your thoughts.

About DeviceLab.dev: Build your own private device lab using your team's Android & iOS devices distributed globally. Unlike public clouds that process your data on their servers, DeviceLab uses peer-to-peer secure connections where your test data streams directly between your trusted nodes. Zero central data storage, local command processing, no test code changes needed. Your apps never leave your control. Learn more at devicelab.dev.

*AI was used to help structure this post.*