76% of Developers Don't Trust AI Tests—Here's Why They're Right

The AI-enabled testing market is projected to reach $3.8 billion by 2032, yet 96.2% of developers won't ship AI-generated tests without human review. Here's why AI testing looks a lot like the emperor's new clothes, and what actually works.

This post was inspired by Vivek Upreti's thought-provoking LinkedIn discussion about whether AI is fundamentally changing testing or just adding cosmetic improvements to existing approaches.

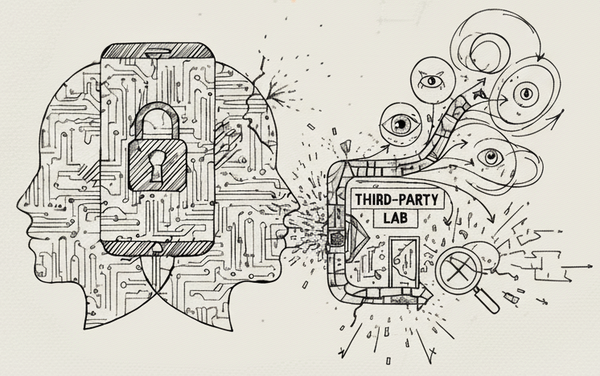

The Trust Crisis Nobody Wants to Talk About

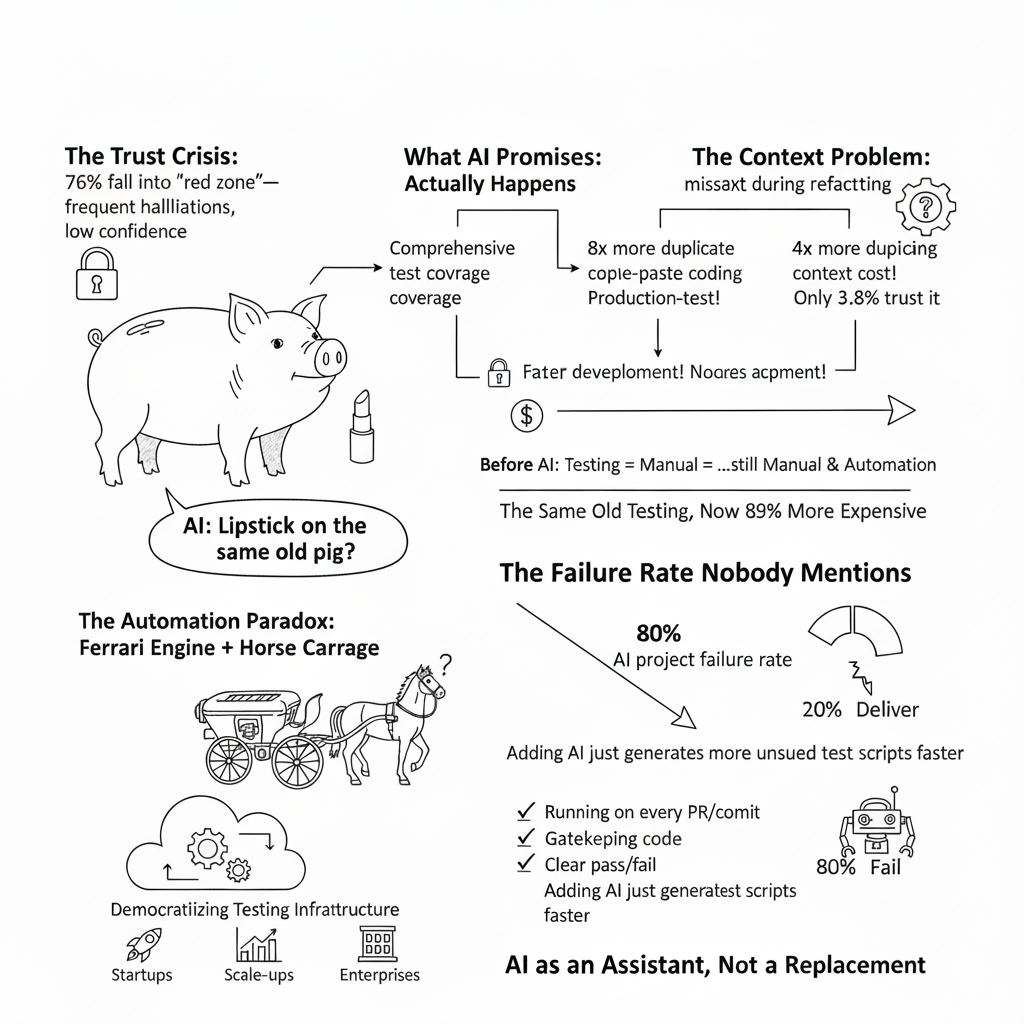

Vivek Upreti recently posed a provocative question: "Is AI just lipstick on the same old pig?"

After digging into the data, I found something that should alarm every CTO betting on AI testing: 76% of developers fall into what researchers call the "red zone"—experiencing frequent hallucinations and having low confidence in AI-generated code.

This isn't a small sample or anecdotal evidence. If your organization matches the survey, that's most of your engineering team telling you they don't trust the tools you're pushing on them. And after years in the testing infrastructure space, I can tell you: they're absolutely right to be skeptical.

Why Developers Are Right Not to Trust AI Tests

The Context Problem That Breaks Everything

The #1 issue developers report with AI testing isn't hallucinations—it's missing context:

- 65% report missing context during refactoring

- ~60% report missing context during test generation and code review

Think about that. Most developers report that the AI is missing context about what it's actually testing. It's like asking someone to proofread a document in a language they don't speak: they might catch obvious typos, but they'll miss everything that actually matters.
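To make the missing-context failure concrete, here is a minimal, hypothetical sketch in Python. It assumes a business rule that lives in the team's heads rather than in the code (discounts are capped at 50%); `apply_discount`, the cap, and both test functions are invented for illustration. A test derived from the code alone tends to mirror the implementation, so it passes even though the rule is broken; only a test written with the rule in mind catches the bug.

```python
# Hypothetical example: the team's rule (not written anywhere in the code)
# is that discounts are capped at 50%.


def apply_discount(price: float, pct: float) -> float:
    """Implementation under test. Bug: it ignores the 50% cap."""
    return price * (1 - pct / 100)


def context_free_test() -> bool:
    """A test derived from the code alone: it re-computes the same formula,
    so it can only confirm what the implementation already does."""
    return apply_discount(100.0, 80.0) == 100.0 * (1 - 80.0 / 100)


def context_aware_test() -> bool:
    """A test that encodes the business rule: an 80% request is capped at 50%."""
    return apply_discount(100.0, 80.0) == 50.0


if __name__ == "__main__":
    print("context-free test passes:", context_free_test())    # the bug slips through
    print("context-aware test passes:", context_aware_test())  # the bug is caught
```

The arithmetic is trivial on purpose: the second test needs knowledge the code doesn't contain, which is exactly what the AI is missing.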

The Quality Metrics Are Damning

Here's what happens when developers actually use AI for testing:

| What AI Promises | What Actually Happens |
|---|---|
| "46% code completion rate!" | Only 30% gets accepted |
| "Comprehensive test coverage!" | 8x more duplicate code |
| "Faster development!" | 4x more copy-paste coding |
| "Production-ready tests!" | Only 3.8% trust it without review |

The Bottom Line: 96.2% of developers won't ship AI-generated tests without human review. That's not automation—that's just expensive assisted typing.

The Same Old Testing, Now 89% More Expensive

Here's the timeline we're living:

Before AI: Testing = Manual & Automation

After AI: Testing = …still Manual & Automation

Fifteen years. Hundreds of "revolutionary" tools. Billions in funding. And we've circled right back to where we started, except now we can say "AI-powered manual testing" with a straight face.

The Reality Check: AI Testing in 2025

Let me share some data that should concern anyone betting their quality strategy on AI:

Adoption vs. Reality

According to 2024 industry reports:

- 79% of large companies have adopted AI-augmented testing

- 68% have already encountered performance, accuracy, and reliability issues

- 60% of organizations still aren't using AI for testing, despite the hype

- By 2027, Gartner predicts 80% of enterprises will integrate AI testing tools, up from just 15% in 2023

Yet here's the disconnect: adoption is soaring, but so are the problems, with most adopters already reporting performance, accuracy, and reliability issues.

The Failure Rate Nobody Mentions

The numbers are stark:

- 80%: AI project failure rate, almost double the failure rate of corporate IT projects a decade ago

- 20%: AI projects that actually deliver on their promise (Gartner)

- 53%: enterprise AI projects that make it from prototype to production

- 100%: CEOs surveyed who have had to cancel or delay at least one AI initiative because of costs

The Real Problem Isn't AI—It's Our Testing Philosophy

Let me be clear: AI isn't the villain here. The problem is that we're using advanced technology to accelerate outdated practices. It's like putting a Ferrari engine in a horse carriage and wondering why we're not winning Formula 1 races.

The Automation Paradox

Here's what most teams get wrong about automation, with or without AI:

If you have automated test cases that aren't:

- ✅ Running on every PR/commit

- ✅ Gatekeeping whether code is ready for review

- ✅ Providing clear pass/fail signals for deployment decisions

Then you're not utilizing automation; you're just collecting test scripts like Pokémon cards.

Adding AI to this broken system doesn't fix it. It just helps you generate more unused test scripts faster.
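What "gatekeeping" means in practice can be sketched in a few lines of Python. This is a minimal sketch under assumptions: `CheckResult`, its `required` flag, and the rule that a missing result blocks the PR are hypothetical choices, not any particular CI system's API. The only real convention it relies on is that CI runners treat a non-zero exit code as a failed step.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckResult:
    name: str
    passed: bool
    required: bool = True  # hypothetical flag: does a failure block the PR?


def gate(results: list[CheckResult]) -> tuple[bool, list[str]]:
    """Decide whether a PR/commit is ready for review.

    Returns (ready, blocking_failures)."""
    if not results:
        # Hypothetical policy: no results means the suite didn't run, so block.
        return False, ["no test results reported"]
    failures = [r.name for r in results if r.required and not r.passed]
    return not failures, failures


def exit_code(results: list[CheckResult]) -> int:
    """Map the gate decision to a process exit code (0 = pass, 1 = block)."""
    ready, _ = gate(results)
    return 0 if ready else 1


if __name__ == "__main__":
    results = [
        CheckResult("login_flow", passed=True),
        CheckResult("checkout_flow", passed=False),
        CheckResult("dark_mode_experiment", passed=False, required=False),
    ]
    ready, failures = gate(results)
    print("ready for review" if ready else "blocked by: " + ", ".join(failures))
```

In a CI job, the script would finish with `sys.exit(exit_code(results))`, so a blocking failure stops the PR from moving forward. Non-required tests still run and report, but they don't hold up review.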

The Hidden Cost of AI-Generated Testing

The industry sells a seductive vision: AI will generate comprehensive test coverage automatically. Let's break down the reality with data from 2024-2025:

The Trust Crisis: When AI Tests Fail

According to recent research, 76% of developers fall into the "red zone"—experiencing frequent hallucinations and having low confidence in AI-generated code. This isn't just about test generation; it's about fundamental trust.

Without proper context, AI-generated tests often test the wrong things or miss critical edge cases entirely.

The Quality Paradox

Here's what the data reveals about AI-generated code quality:

| Metric | Reality |
|---|---|
| GitHub Copilot code completion rate | 46% |
| Code actually accepted by developers | 30% |
| Code duplication increase in 2024 | 8x |
| AI-linked code cloning increase | 4x |
| Developers with low hallucinations AND high confidence | 3.8% |

The Cost Explosion Nobody Talks About

The financial reality is sobering. The global AI-enabled testing market reached $856.7 million in 2024 and is projected to grow to $3,824.0 million by 2032—but where is all this money going?

Here's the math that vendors won't show you:

1. AI accelerates development → Developers produce more PRs than ever

2. More PRs → More test runs needed

3. AI generates more tests → Each test run becomes more expensive

4. Cloud infrastructure costs → 89% increase expected (2023-2025)
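The chain above multiplies rather than adds, which is why costs climb faster than any single factor. Here is a minimal Python sketch of that arithmetic, assuming a simple linear model where every test minute costs the same. All the inputs are hypothetical round numbers, not measured data.

```python
def monthly_test_cost(prs_per_month: int, runs_per_pr: float, tests_per_run: int,
                      minutes_per_test: float, cost_per_minute: float) -> float:
    """Monthly CI test spend under a simple linear model: every factor multiplies."""
    return prs_per_month * runs_per_pr * tests_per_run * minutes_per_test * cost_per_minute


# Hypothetical baseline team (illustrative numbers only).
baseline = monthly_test_cost(prs_per_month=200, runs_per_pr=3, tests_per_run=500,
                             minutes_per_test=0.5, cost_per_minute=0.01)

# Same team with AI assistance: hypothetically 1.5x the PRs and 2x the tests.
with_ai = monthly_test_cost(prs_per_month=300, runs_per_pr=3, tests_per_run=1000,
                            minutes_per_test=0.5, cost_per_minute=0.01)

print(f"baseline: ${baseline:,.0f}/month")
print(f"with AI:  ${with_ai:,.0f}/month ({with_ai / baseline:.1f}x)")
```

A 1.5x increase in PRs combined with a 2x increase in tests yields a 3x bill, before step 4's rising unit prices multiply on top of that.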

Real Infrastructure Example: For a medium-sized NLP project:

- 4 x EC2 p3.8xlarge GPU instances for training

- Cost: thousands of dollars per month

- Plus: Storage and networking costs

- Multiply by: Every PR, every test suite, every deployment

Companies betting on "just run everything" quickly discover their testing costs can exceed their entire development budget.

The Infrastructure Trap

The typical enterprise response? "We'll build our own testing infrastructure to control costs."

This is where good companies go to die.

Suddenly, instead of building your product—the thing that actually generates value—you're:

- 🔧 Managing device farms

- 🔧 Building test orchestration systems

- 🔧 Maintaining CI/CD pipelines

- 🔧 Debugging infrastructure issues

- 🔧 Hiring DevOps engineers instead of product engineers

You've transformed from a product company into an accidental infrastructure company. And trust me, unless you're Google or Facebook, you're not going to out-infrastructure the companies whose entire business model is infrastructure.

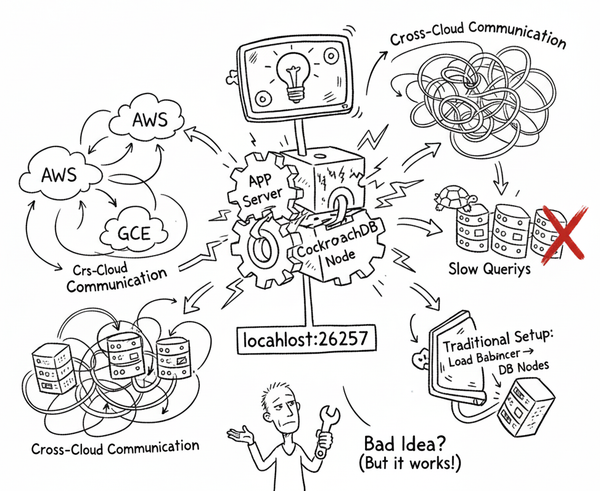

A Different Path: Democratizing Testing Infrastructure

This is why we're betting on a different approach: Democratizing Mobile Device Labs.

The future of testing isn't about AI magically solving all our problems. It's about making enterprise-grade testing infrastructure accessible to teams without enterprise-grade budgets or complexity.

What This Actually Means

🚀 For Startups: Access to the same testing capabilities as Fortune 500 companies without hiring a DevOps team.

📈 For Scale-ups: Focus engineering resources on product development, not infrastructure maintenance.

🏢 For Enterprises: Reduce infrastructure overhead while maintaining quality standards.

The Testing Future We Actually Need

Here's what the next decade of testing should look like:

1. Smart, Not More

We don't need more tests. We need smarter test selection:

- Risk-based testing that knows what to run when

- Intelligent test impact analysis

- Predictive failure detection
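Test impact analysis is simpler than it sounds. Here is a minimal Python sketch, assuming you already have a per-test coverage map (which files each test exercises), for example one collected from a previous full run. The file and test names are hypothetical. It selects only the tests that touch the changed files and falls back to the full suite when a change touches a file that no test is mapped to.

```python
def select_tests(changed_files: set[str], coverage: dict[str, set[str]]) -> list[str]:
    """Return the tests to run for a change, based on a test -> files coverage map."""
    covered = set().union(*coverage.values())
    if changed_files - covered:
        # Conservative fallback: a changed file isn't in the map, so we can't
        # tell which tests it affects. Run everything rather than guess.
        return sorted(coverage)
    return sorted(test for test, files in coverage.items() if files & changed_files)


# Hypothetical coverage map for illustration.
coverage = {
    "test_login": {"auth.py", "session.py"},
    "test_checkout": {"cart.py", "payment.py"},
    "test_profile": {"profile.py", "session.py"},
}

print(select_tests({"payment.py"}, coverage))
print(select_tests({"session.py"}, coverage))
print(select_tests({"new_feature.py"}, coverage))
```

The fallback trades some savings for safety: a stale or incomplete map should cost you a full run, not a missed regression.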

2. Infrastructure as a Given, Not a Project

Testing infrastructure should be like electricity: you plug in and it works. Almost no company builds its own power plant to run its computers.

3. AI as an Assistant, Not a Replacement

AI should help us:

- Identify what needs testing (not generate random tests)

- Predict where failures are likely (not create more noise)

- Optimize test execution (not just create more tests)
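Predicting where failures are likely doesn't have to start with a model. As a deliberately simple, non-AI stand-in, the Python sketch below orders tests by their historical failure rate so that likely failures surface first. The history format and test names are hypothetical. The add-one (Laplace) smoothing keeps a test with little or no history from being ranked as a sure pass.

```python
def failure_score(failures: int, runs: int) -> float:
    """Smoothed failure rate: (failures + 1) / (runs + 2), i.e. add-one smoothing.
    A test with no history scores 0.5, so it is treated as uncertain, not safe."""
    return (failures + 1) / (runs + 2)


def prioritize(history: dict[str, tuple[int, int]]) -> list[str]:
    """Order tests by descending smoothed failure rate; ties break alphabetically."""
    return sorted(history, key=lambda name: (-failure_score(*history[name]), name))


# Hypothetical history: test name -> (failures, runs) over a recent window.
history = {
    "test_login": (1, 100),
    "test_checkout": (12, 100),
    "test_new_feature": (0, 0),
}

print(prioritize(history))
```

Combined with stopping at the first failure, this ordering turns a long red build into a short one. A learned model could later replace `failure_score` without changing the rest.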

4. Cost-Conscious Quality

Every test should justify its existence:

- What risk does it mitigate?

- What's the cost of running it?

- What's the cost of not running it?
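Those three questions can be turned into a rough expected-value check. Here is a minimal Python sketch, assuming you can estimate, per test, the chance that a run catches a real regression, the cost of that regression reaching users (the cost of not running), and the cost of each run. `CostProfile` and every number below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostProfile:
    name: str
    p_catch: float      # estimated chance a single run catches a real regression
    escape_cost: float  # cost if that regression ships (the cost of NOT running)
    run_cost: float     # compute and wait time per run (the cost of running)

    def net_value_per_run(self) -> float:
        """Expected loss avoided per run minus what the run costs."""
        return self.p_catch * self.escape_cost - self.run_cost


def worth_keeping(test: CostProfile) -> bool:
    return test.net_value_per_run() > 0


# Hypothetical estimates for two tests.
suite = [
    CostProfile("payment_refund_flow", p_catch=0.01, escape_cost=50_000, run_cost=2.0),
    CostProfile("duplicate_snapshot_test", p_catch=0.0001, escape_cost=1_000, run_cost=0.5),
]

for test in suite:
    verdict = "keep" if worth_keeping(test) else "cut or run less often"
    print(f"{test.name}: {test.net_value_per_run():+.2f} per run -> {verdict}")
```

The estimates will be rough, but even rough numbers force the question: a test that can't justify itself should run less often or not at all.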

The Bottom Line

We're at an inflection point. We can either:

Option A: Keep putting lipstick on the pig—using AI to do the same things faster and more expensively.

Option B: Rethink the fundamentals—what needs testing, when it needs testing, and how to make quality accessible without breaking the bank.

The companies that thrive in 2035 won't be the ones with the most AI-generated tests. They'll be the ones who figured out how to maintain quality without drowning in complexity or costs.

Because at the end of the day, customers don't care if your tests are AI-powered. They care if your product works.

What's your take? Are we using AI to solve the right problems in testing, or are we just automating our way into more expensive versions of the same issues?

If you're interested in learning more about democratizing testing infrastructure, let's connect. We're building something different, and we'd love to show you what's possible when you stop fighting infrastructure and start focusing on quality.

Learn more about how we're democratizing mobile testing infrastructure at DeviceLab, where you can build your own secure, distributed device lab without enterprise-grade costs.

Note: AI was used to help structure and format this post.

Data Sources

- 76% of developers in "red zone" with AI code: Qodo's State of AI Code Quality Report 2025

- 79% AI testing adoption, 68% encountering issues: Leapwork's AI Testing Tools Report 2024

- 80% AI project failure rate: Harvard Business Review

- $856.7M to $3.8B market growth: Fortune Business Insights - AI-enabled Testing Market

- 8x code duplication increase: GitClear Report via DevClass

- 30% Copilot acceptance rate: AI-Generated Code Statistics 2025

- 89% computing cost increase: DDI Development - AI Cost Analysis

- 53% prototype to production rate: ITRex Group AI Implementation Study

- Gartner 80% adoption by 2027: Tricentis AI Testing Trends